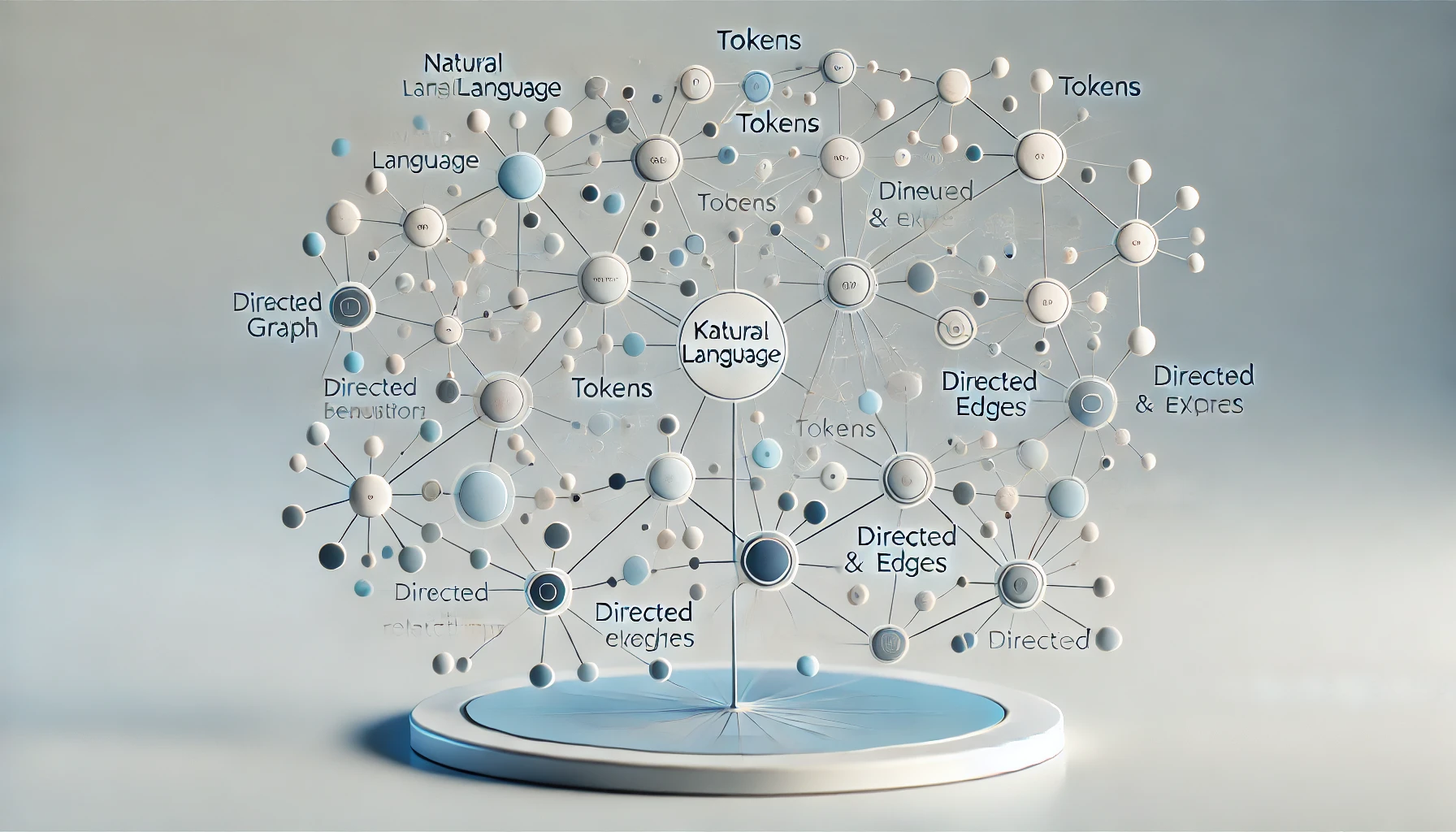

What Is a Knowledge Graph? A Practical Guide Across RDF and Property Graphs

Formal basics, real systems, and why industry favors property graphs while RDF remains important

By Taewoon Kim

Motivation
